AI won't be good for search or independent publishers

posted by Jeff | Wednesday, January 25, 2023, 7:11 PM

There's a lot of talk right now about the gold rush around artificial intelligence, with Google starting to panic while Microsoft plans to use ChatGPT in its products. On the surface, this sounds great, but let's remember the time Facebook's chat bot went racist in just a weekend. The problem with AI is that the scientists haven't figured out how to code critical thinking or morality. It's certainly bad for the Internet, but it's even worse for the independent publishers who put things on the Internet. Let me explain.

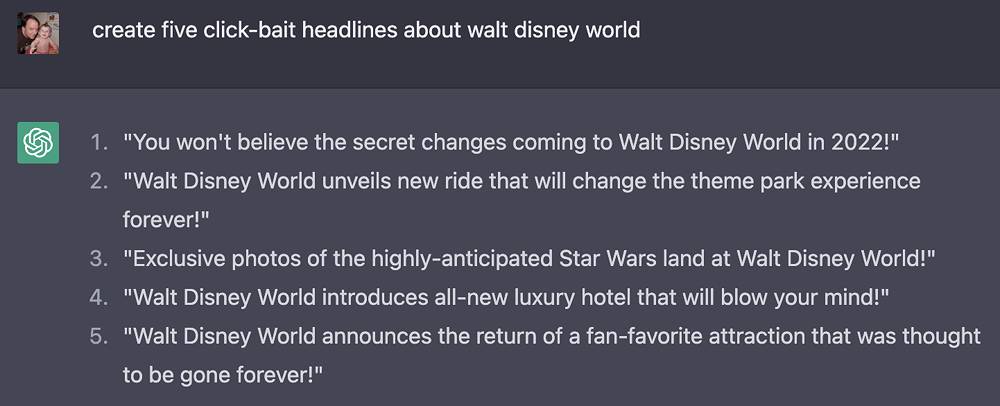

If you think AI will improve search, consider how terrible the current algorithms are. Not only do they tend to reinforce what you already believe by serving you what you want, but they also do a horrible job of filtering out click-bait nonsense. The average news suggestion reads like, "10 things that make this thing quiver at the sight of a phenomenon you won't believe." In theme park circles, an area I follow, you get things like, "This theme park is shutting down this ride indefinitely," instead of, "Disney is closing Splash Mountain to re-theme it to The Princess and the Frog." The algorithms reward bullshit.

It stands to reason that if AI is embedded in search, this will only get worse. It's not just the lack of critical thinking; it's that the machines learn from us, and there are a lot of really terrible, toxic things on the Internets. How does it know the difference?

But I think the worst part is that if the Internet becomes a training data set, built in part on the work of hundreds of thousands of independent publishers, their work is simply synthesized into answers for every question, without compensation. Google is already walking this line, showing a few sentences scraped from sites as answers. The publishers don't get the traffic, which means they don't even get the crappy ad revenue. When they can't afford to stay online, their work disappears, leaving only the worst data set: social media.

I know, that all sounds pretty bleak. I'm not sure what we do about it.
