Archive: February, 2018

Photography and physics

posted by Jeff | Monday, February 26, 2018, 8:32 PM | comments: 0

Phone photography has come a long way in the last few years. The sensors have gotten to the point where a lot of the quality is now deferred to the software in terms of color preferences, noise reduction and lately depth of field. I'll freely admit that I'm surprised at how far things have come, relative to the ability of SLR cameras. I wouldn't do engagement photos with a phone, but the quality gap sure has shrunk a bit.

The latest high end phones are doing tricks with depth of field using distance data and an algorithm. Using two cameras (Apple) or different pixels in the same camera (Google), they figure out how far away stuff is and blur it as if it were being viewed through a lens with an open aperture. The Google way of doing this is more or less the same way that Canon calculates auto-focus in its cameras (and it works really well, apparently). I think it's a convincing effect (see below, as shot on my Pixel 2), and most of the time it feels like something I would shoot on an SLR. Probably 1 in 10 times, something about it feels fake, or it didn't get a good distance read and something isn't blurry when it should be. Even in my sample, look at the woman's foot in the lower left.
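As a rough illustration of the general idea, and not Apple's or Google's actual pipeline (which is far more sophisticated), here is a minimal Python sketch that blurs one row of pixels based on a per-pixel distance estimate. It assumes the depth map already exists, and the `strength` and `max_radius` knobs are hypothetical. The blur amount loosely follows the thin-lens relationship, where defocus for a pixel at distance d, with focus at distance s, grows with |d - s| / d. A simple box blur stands in for a real lens-shaped blur.

```python
def synthetic_bokeh_row(pixels, depths, focus_depth, strength=4.0, max_radius=3):
    """Blur each pixel by an amount that grows with its distance from the focus plane.

    pixels: brightness values for one row of an image
    depths: estimated distance (e.g. meters) for each pixel, same length as pixels
    strength, max_radius: hypothetical tuning knobs, not from any real phone
    """
    if len(pixels) != len(depths):
        raise ValueError("pixels and depths must be the same length")
    if any(d <= 0 for d in depths):
        raise ValueError("depths must be positive")
    out = []
    for i, depth in enumerate(depths):
        # Relative defocus: 0 on the focus plane, larger the further away it is.
        defocus = abs(depth - focus_depth) / depth
        radius = min(max_radius, round(strength * defocus))
        lo, hi = max(0, i - radius), min(len(pixels), i + radius + 1)
        window = pixels[lo:hi]
        out.append(sum(window) / len(window))  # simple box blur over the window
    return out


if __name__ == "__main__":
    # Background at 8 m (first four pixels), subject at 2 m (last three).
    row = [10, 200, 10, 200, 50, 50, 50]
    depth = [8, 8, 8, 8, 2, 2, 2]
    print(synthetic_bokeh_row(row, depth, focus_depth=2.0))
```

With the subject at 2 m and the background at 8 m, the subject pixels come back unchanged while the background pixels get averaged with their neighbors. The sketch also shows why a bad distance read matters: a wrong depth value for a pixel translates directly into the wrong amount of blur.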

Getting great results with shallow depth of field usually works best with a long lens. That's why sports photography tends to isolate the moment so well, because the background is often indecipherable. The algorithmic depth of field on phones mostly works with portraits of humans. Otherwise, it doesn't make much sense in most wide-angle photos. Even my sample below may not make the most sense. So the question then, in my mind, is can we achieve the "long lens" capability on phones? My guess is that it's going to be a while.

You could probably argue that a phone can have enough pixels to allow cropping as a substitute for zoom. There have already been phones with 40+ megapixel sensors, and in practical terms, for online use and even in prints, anything over 20 is probably overkill. But phone lenses are as wide as a 28mm equivalent on a full-frame SLR, which is already pretty wide. Assuming you have 40 megapixels to start with, cropping to a 200mm equivalent, which is merely an OK zoom, narrows both width and height by about 7x, so you'd be left with less than one megapixel to work with. My Pixel 2 starts with 12, so cropping that would get you down to about a quarter of a megapixel, smaller than even a 720p HD video frame, which can look OK in motion but terrible as a still. Plus there are likely to be issues with the measured distance with so little data.
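To make the crop arithmetic concrete, here is a minimal Python sketch. It assumes a simple center crop from a 28mm-equivalent lens to a 200mm-equivalent field of view and ignores aspect ratio and lens quality; the function name is hypothetical. Because cropping narrows both the width and the height by the focal-length ratio, the pixel count falls by the square of that ratio.

```python
def cropped_megapixels(sensor_mp: float, native_mm: float, target_mm: float) -> float:
    """Megapixels left after cropping a native_mm-equivalent frame to match target_mm.

    Cropping shrinks each dimension by target_mm / native_mm, so the total
    pixel count shrinks by the square of that factor.
    """
    if target_mm < native_mm:
        raise ValueError("cropping can only narrow the field of view")
    crop_factor = target_mm / native_mm
    return sensor_mp / crop_factor ** 2


HD_720P_MP = 1280 * 720 / 1e6  # a 720p frame, for comparison

for label, mp in [("40 MP sensor", 40.0), ("12 MP Pixel 2", 12.0)]:
    left = cropped_megapixels(mp, native_mm=28, target_mm=200)
    print(f"{label}: {left:.2f} MP at 200mm equivalent (a 720p frame is {HD_720P_MP:.2f} MP)")
```

With a 200/28 (about 7.1x) crop factor, the pixel count drops by a factor of about 51, which is why cropping runs out of pixels so quickly as a stand-in for a real zoom lens.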

I theorize that the only way you make this work on a phone is to have larger sensors with denser pixels, which, from a pure physics standpoint, eventually limits the amount of light each pixel can collect, or to find some way to make an actual optical zoom lens more compact. The latter sounds like a really hard problem to solve. I have one of the less expensive versions of a 70-200mm zoom, and it has 16 separate pieces of glass in it, and it can't do wide angle at all. More pixels sound possible, but I don't know what the constraints are on the dual pixel scheme. My armchair technologist opinion is that it would be a tougher nut to crack.

Maybe it doesn't matter. The cameras in my last three phones (Pixel 2, Pixel and Nexus 5X) have been so good that I'm not compelled to bust out the SLRs, or even my Lumix with the exquisite 12-35mm micro-4/3 lens, very often. I use the SLRs when I'm doing something quasi-pro like shooting a charity race, or maybe a birthday party, and for fireworks or any nighttime exposure, because you need manual exposure control. I took the Lumix with me to Alaska (glad that I did), but every cruise since has been phone only. Every year I take more photos with my phone than the year before.

I'd be naive to think that clever people will never figure out the science of putting SLR capability into a tiny package. Fortunately, even with the technological leaps we've seen, there is still a difference between "taking pictures" and "photography." That's pretty uppity of me to say, but let's just say that YouTube hasn't made everyone a filmmaker either.

Life is intense, but not always hard

posted by Jeff | Saturday, February 24, 2018, 9:00 PM | comments: 0

I typically post a link to my blog posts on Facebook, which is where I get a lot of feedback from friends. When I posted "22 bandages," an account of how we deal with Simon's picking problem, there were a lot of expressions of praise and empathy. I greatly appreciate these gestures, but just as social media can often suggest that people live a perfect life, I don't want anyone to think it's all bad, either.

I would describe my life mostly as intense, and definitely not all bad. Yes, there are days that go by where I go to bed with nothing left, emotionally and physically. I don't imagine that this is particularly unique among parents, even with the ASD and ADHD challenges. Raising kids can be exhausting. I also have days where work, rewarding as it is, leaves me ready to unplug and not think about anything technical at all. Those situations are intense, but certainly not bad. I'm amazed at how often we do great work with so few people.

I'm not at all a Type-A personality. I can't even relate to "those people." My BFF is very Type-A, and she plans a lot and checks boxes. I love her to death, but I couldn't roll like that. I need time where I do nothing, or spontaneously do stuff I had not previously thought much about. More to the point, I need to allow myself to be like that without self-loathing. As such, I've decided that my Saturdays, as much as possible, have no agenda.

Today I woke up, and for whatever reason, watched Ocean's Eleven, because Clooney, Pitt, Damon, Mac, Cheadle, et al., are the tits. I took a nap on the patio. I walked out to get the mail. I vacuumed upstairs. Watched another movie with Diana. Listened to tunes, had a fruity drink. Watched fireworks. Wrote a blog post. I had no intention of doing any of this... I just let it happen. I need at least a day a week to allow for that.

The hardest "personality adjustment" that I've made in the v2.0 of my life has been to both pursue a better life and person, while not feeling bad about the recharge and down time. There is something wonderful and calming about doing nothing, drifting off into a nap. I wake up feeling refreshed and awesome, with new ideas and excitement about big picture and minor things that happen every day.

Life is hard at times, but that's not unique to my life. The challenge is to not let the intensity blow out the chilled out times. It's OK to go at it hard, but let yourself do nothing. Let that time be productive in unexpected ways.

Our twisted view of what makes us exceptional

posted by Jeff | Thursday, February 22, 2018, 11:38 PM | comments: 0

Among other things, the issues of gun regulation and healthcare get people fired up. What bothers me is not the positions themselves that people take, but rather the notion that the United States is too exceptional of a case to learn from any other country. No one seems willing to even consider that, perhaps, American problems could be addressed by looking at the solutions elsewhere.

I'm a big fan of humility and self-awareness. It took me a long time, but at some point I realized that these qualities were ultimately the things that would allow me to be successful in my career. It is, and has been, harder to apply these to my interpersonal relationships, and definitely to my role as a parent, but dammit, I try. The humility reminds me that even if I am objectively doing the right things, I am open to improving. The self-awareness helps me know when I'm not exercising humility or I'm objectively doing the wrong things. In other words, I avoid viewing myself as exceptional, because doing so would short-circuit my ability to correct and improve.

We don't do that as a nation. To be fair, I don't think it's wrong to feel that we're exceptional as a nation, but again, doing so without humility and self-awareness is a blocker to improvement. So we look at the regulation of weapons in other countries, or the health care systems where there are better outcomes for less money, and we simply end the conversation with, "That wouldn't work here." For a nation that prides itself on winning, that sure feels like a defeatist attitude to me.

My sense of optimism often gets the better of me (this may be a self-defense mechanism), so I do think we have a lot of reasons to believe we're exceptional. We still make great things like electric cars, rockets and wooden roller coasters. Despite the current political climate, we have a radically diverse population and immigrants that come here to add to our collective ability. Our artistic contributions to the world are pretty good. Our science and technology often lead the world in innovation.

I'm not against flag waving, I just don't think it has to be boastful when we have so much room to improve.

Me and real estate, we have a complicated relationship

posted by Jeff | Thursday, February 22, 2018, 9:09 PM | comments: 0

Found out today that the asshat of a buyer we had for our house bailed after he couldn't get financed. The asshatery isn't solely his fault, because frankly the lender strung him along for months with some now obvious issues they had to have known about.

So as we start over, that means having to pay the old mortgage for at least two more months, plus the HOA fee, and not having those proceeds means I can't roll cash into the new loan, and recast it so we're beyond the 20% mark (and thus no PMI). Every month we're not sold causes our net expenses to be about $2,200 higher than normal. On the plus side, we did work $2,800 in non-refundable deposits into the extensions, so at least we're covered for a month and change. However, this also causes a delay in the other projects we have, including solar on the new house. We were able to extend the lease of our Leaf (again), so we kicked that can down the road.

It seems like every other transaction is a pain in the ass. When I got my first house in 2001, I literally started with a second mortgage to put more "down" and beat the PMI. Yeah, lenders did that back then. Then there was the non-sale after we moved to Seattle from 2009 to 2011, which I've been complaining about for years and can't let go. Then after moving back into that place and departing for Orlando, we sold it in 48 hours in 2013. The next year I went through financing hell just because I was working as a contractor, and therefore had "no income." The financing for our current place got off to a difficult start, but in the end, they let me put down 11% with another entire mortgage. Now the other place doesn't sell, and once again, through no fault of my own.

My realtor is confident that we'll sell relatively quickly, because the neighborhood is sold out and we're not competing against new construction. I hope he's right. I'm mentally exhausted by this.

"I may have committed many errors"

posted by Jeff | Tuesday, February 20, 2018, 10:17 AM | comments: 0

This Presidents Day, I couldn't help but think about the precedent and grace that George Washington established as the nation's first to hold the office. The end of his farewell address captured this perfectly.

Though, in reviewing the incidents of my administration, I am unconscious of intentional error, I am nevertheless too sensible of my defects not to think it probable that I may have committed many errors. Whatever they may be, I fervently beseech the Almighty to avert or mitigate the evils to which they may tend. I shall also carry with me the hope that my country will never cease to view them with indulgence; and that, after forty five years of my life dedicated to its service with an upright zeal, the faults of incompetent abilities will be consigned to oblivion, as myself must soon be to the mansions of rest.

Relying on its kindness in this as in other things, and actuated by that fervent love towards it, which is so natural to a man who views in it the native soil of himself and his progenitors for several generations, I anticipate with pleasing expectation that retreat in which I promise myself to realize, without alloy, the sweet enjoyment of partaking, in the midst of my fellow-citizens, the benign influence of good laws under a free government, the ever-favorite object of my heart, and the happy reward, as I trust, of our mutual cares, labors, and dangers.

Always think about whether or not the person you vote for can demonstrate this kind of humility.

22 bandages

posted by Jeff | Friday, February 16, 2018, 10:39 PM | comments: 0

Tonight I helped Simon take off 22 bandages before taking a shower. As horrible as it sounds, it was 25 last time, so this is an improvement. The bandages cover the places on his skin that he picked until it bled, mostly on his forearms and lower legs.

I've been thinking about whether or not I would write about this for some time. I used to write about his autism spectrum disorder diagnosis quite a bit. There was something that seemed obvious about the path for that, even though ASD can encompass so many things and every kid can be wildly different. In Simon's case, it was clearly an issue with inflexible thinking, but socially he was still a loving kid and he didn't seem to be cognitively impaired. Even now, I don't feel like he isn't smart enough, but a double knot or shoelaces that won't stay tied are a show stopper, and seeing a word problem in school keeps him from even attempting to solve it (I think this is because he sees it with pre-ADHD med eyes, not because of the ADHD).

But the ADHD challenge, and some of the things that may or may not be associated with it, are different, and harder to talk about. Still, I want to write about it and capture how I'm feeling at the moment, so I can refer back to it, but also because I know that parents have a way of finding this sort of thing and taking comfort in knowing they're not alone. So here we are.

I hate that we're medicating the kid without therapy, but we have little choice. There are no qualified therapists even remotely near us, and they're not covered by insurance. The pediatric psychiatrist that we do see is on the other side of town, collects a $50 co-pay each visit, and there are at best two or three people who do what she does in greater Orlando. That's frustrating because I'm not at all satisfied with her results, but we don't have a lot of choices. The school concentration drug prescription took some experimentation, but we have something that sort of works now (after a $300 DNA test explained what will and won't work).

His concentration in school is better, but again, there are certain things he won't even attempt. We see this at home with the simplest things, but sometimes that might be because we've offered him shortcuts or accommodations. I don't think that's what's going on for school work, because if he knows he'll be penalized for incomplete work, the reaction is intense and emotional and crushing on his self-esteem. It's not the reaction I would expect if he simply wasn't interested (something I was intimately familiar with in school).

In addition to the amphetamine he takes on school days, he's also on a med for anxiety (which was something initially prescribed so he could participate in therapy), and another one that kind of amplifies the amphetamine effects and might have reduced the picking. He's picked his fingers for a long time, the pads, not the cuticles, but at some point it started on his arms and legs. This is the most upsetting thing. I can roll with academic challenges and social contracts his ASD brain can't reconcile, but this is something completely different. We have him wearing long sleeves and pants at all times now, as well as some long tube socks so he can't get to his legs. Sometimes we have him wear gloves. This is only going to work so well in Florida, where it will be 90 degrees every day again soon.

His teachers the last two years are nothing short of saints for doing their best to help him. We've been pretty lucky for two years in a row. They've given insight into his social scene, too, which isn't ideal, because kids can be real dicks. He's kind of the "weird kid" at times, and being covered in bandages isn't going to make that easier.

Also, as an aside, the support of kids who have any kind of psychological challenge in public schools is completely inadequate. You could add a school psychologist to literally every public school in America for the price of 7 stealth bombers that the joint chiefs say they don't need, but you know, no one will touch that sacred cow.

I'm not looking for sympathy or attention with this, I just need to vent. Tonight, after peeling off all of those band-aids, I watched him in the shower and kept him on-task, making sure he didn't pick. I dried him off and immediately followed him to get his pajamas on (long sleeves/pants). I monitored his teeth brushing, then trimmed his finger nails and put a few new bandages on his worst fingers. I used Band-Aid® antiseptic stuff on all of his wounds, which will hopefully get a little air overnight. Then I lay down next to him until he fell asleep to make sure he didn't hurt himself anymore tonight.

Amazon Echo vs. Google Home: FIGHT!

posted by Jeff | Thursday, February 15, 2018, 10:49 PM | comments: 0

We've been Amazon Echo people for more than a year. I bought one on a whim, and then some Hue lights and other stuff, and I got pretty hooked on all of that connected gadgetness. Before you knew it, we had five Echo Dots around the house. They're useful for shouting out a question about cups to ounces or whatever, and definitely helpful to turn lights on and off or tweak the air conditioner. But really, the killer app for me is the music. The little speakers don't sound all that great, but for the ten minutes you're in the bathroom, it's good enough. In my office, I have one plugged into my computer speakers, and in the living room, I have one plugged into the stereo.

In 2010, I think it was, Amazon opened up their music service to let you upload 250,000 of your own songs into their storage, for $25 a year. I put about 5k files up there. Truthfully, I did this mostly for the backup angle, but I was able to listen anywhere from a web browser. It wasn't until I finally flipped from Windows Phone to Android that I could start doing it on my phone, late 2015. Then the Echos put it everywhere in my house, and we were shouting at Alexa from all angles. They even baked it into the FireTV, which we already had.

Then, last year, they announced that the music "locker" service was going away, not counting anything you bought from them. It's still unclear what they mean though. They're not taking on new customers, for sure, but the messaging on Amazon's subscription page seems to imply that as long as you keep renewing it automatically every year, you can keep everything up there. So I don't know what exactly will happen, but it has me spooked. You might ask why I'm not just content to use one of the streaming services, and that's a fair question. Simply put, I want to own my stuff. If some artist doesn't strike a deal and their catalog disappears, that sucks. I'm possibly a relic from the old days of physical media (collectible as it was), and that's fine.

When my team and I won a bunch of Google Homes at the Intuit hackathon we did in November, I wasn't exactly sure what I'd do with the speaker, but I did plug it in and cast some songs to it. I was surprised at how good it sounded. When the Amazon announcement came, I found out that Google let you upload a bunch of music for free into their service, so I tried that out. The phone app is way better than Amazon's offering, so at the very least it seemed like a compelling alternative. I don't have a ton of playlists to convert, so jumping ship would be fairly low friction.

The freebies didn't end there. When we ordered Pixel 2 phones (in addition to $200 in Project Fi credits and $395 in trades for our phones), we scored free Google Home Minis. So all of a sudden, we're at system parity with Amazon. I had to buy a $35 Chromecast to get music to my receiver (the Home Mini won't send audio via Bluetooth to the receiver the way the Echo can), but that fills in the YouTube gap caused by the nonsense between Google and Amazon over FireTV. Here are some comparisons between the two ecosystems.

Music

As I said, Google has the better music app over Amazon, at least on Android. The web app is even better, and makes it super easy to cleanly edit metadata when necessary. The matching algorithm had split some albums on album artist for some reason, but it didn't take long to resolve. Playlist editing is better in the Google UIs as well. And of course, if you're a new customer, you can't upload anything to Amazon's service anyway.

Related, the sound of a Google Home Mini is vastly superior to an Echo Dot. I'm sure a full-size Echo sounds good, but the Dot model isn't much better than a cell phone. Not a big deal when it's answering commands, but music should be better. It's still not going to impress audiophiles or anything, but it's pretty solid for that pre-bed ritual.

There is a snag today. Some albums won't play on the speakers, saying I need a subscription even though it's in my library. Others will play, but skip most of the tracks. I used the chat on a help page, and apparently there's a known issue that they're working on. The support agent promised to call me tomorrow, if you can believe that. Playlists work fine.

Home Automation

There isn't a ton of difference here. Hue responds faster on Google than Amazon for some reason, if that half-second matters to you. Google doesn't know how to do Hue scenes, which the Echo treats as devices.

Configuration App

The Alexa app and Google Home app have similar card systems that are generally useless, but Google's is still better in the way it allows you to adjust volume and change settings on devices. It also allows you to direct output of media to different devices, so you can have your living room Home Mini output sound to the Chromecast, for example. It's also just laid out more logically, with "devices" as a top-level item.

Assistant Stuff

Alexa can read your calendar from Office 365, Gmail and G-Suite, whereas Google can only do Gmail. That's a huge failure if that's important to you, especially because it won't do G-Suite, which is where my stuff lives. Figure that out... Amazon can do it, but Google can't do it and they own the product. Beyond that, it will do the usual kinds of reminders and alarms and such. Amazon technically wins there though, because you can have Alec Baldwin wake you up, and that's amazing.

Video

This really comes down to control issues. FireTV comes with a remote and a full on user interface to navigate Amazon offerings as well as third-party apps like Netflix and Hulu. If you buy movies digitally, it almost doesn't matter where you do it now that all of the services tie in to Movies Anywhere, despite a few studio holdouts. The FireTV is also the conduit to all of the free stuff that comes with Prime. Some of it is pretty damn good, especially the original stuff.

The Google world centers on the Chromecast, which is like a FireTV but without the apps or a remote. Instead, you use the apps on your phone to "cast" stuff to it. This is a sweet arrangement in a lot of ways, because there's no pairing to do as with Bluetooth. I can browse and push music to a speaker with my phone (and using either Google Play Music or Amazon Music, no less), which is better than trying to shout out the name of the album you can't remember. But for video, I'm not a huge fan. I'm just running down the battery on my phone doing complex browsing instead of arrowing a cursor around. Obviously I don't get the Prime exclusives, but at least I get the YouTube back, which we mostly use for rocket launches.

Summary

For music, as long as they get the album problem worked out, Google wins hands down. The apps are so much better, and the little speakers sound better. Video is better with FireTV. The home automation and peripheral stuff is all kind of a draw. At this point, it doesn't cost me anything to have both, but the music situation is the one I care most about.

Oh, and before you offer some freak out paranoid rant, seriously, I have no shits to give about who might learn when my lights are on (hint: when it's dark) or when my air conditioning is running (hint: when it's hot). I realize that there are some privacy implications and trust issues around the use of data generated by the gadgets, but the very fact that I'm blogging about it in detail should give you some indication about why it's not that important.

Social media, and how the Internet was supposed to be

posted by Jeff | Saturday, February 10, 2018, 11:58 PM | comments: 0

I'm not sure when the term "social media" was coined, but it didn't seem to really get tossed around until it was clear that Facebook and Twitter weren't going away. It just feels silly because long before these institutions were founded, we had things like AOL Instant Messenger (RIP), Usenet, countless forums and even Live Journal. Heck, my idea for Campus Fish predated Facebook by several years, and even targeted college kids (I mistakenly thought they'd pay for a place to post a blog and photos, though in my defense, this was pre-Napster). My point is that getting out among people in a virtual sense was hardly something invented by the big services, they just refined it and hit the critical mass first.

Before the days of ubiquitous connectivity, which started to really come into its own with the second iPhone and public WiFi starting to appear all over, the Internet was largely tied to desktop and laptop computers. The gateway was the browser. There was something fantastic about the fact that popular sites were not made by any of the "old" media companies. Every niche had its own home-grown players. Sure, Google started owning search early on, but the best stuff often came from recommendations from friends. Anyone could win on the Internet, you just needed something compelling that people would keep coming back for. There was even a rich system of companies anxious to sell advertising on your behalf. I paid my mortgage that way for a long time.

Then certain players emerged as bigger winners. Facebook and Twitter were the biggest in terms of mind share and engagement, even if they didn't make money at first. Google won not only as the gateway to everything, but also started owning an alarming portion of the ad market. For a lot of people, Google and Facebook are the Internet. The mobile transition made this even worse, with apps and shells in the mobile operating systems walling you off from the Internet at large. Entire industries cropped up around trying to game Google to make sure your thing showed up first in the search results. YouTube, part of Google, became the de facto Internet TV station.

With these established platforms, we've seen another strange phenomenon: People who get famous for no particular reason. I've been seeing these stories lately about this Logan Paul guy and the stupid shit he's been doing on YouTube, apparently making a ton of money. It has caught up with him, in that Google is suspending his pay for violating "community guidelines" or something, but he's still defiant and doesn't appear to be learning anything from the experience. How weird is that... a person with no life experience or clue about how to conduct yourself as a contributor to society makes money for that inexperience. That's what our culture is rewarding. (Which you already know... we elected a morally bankrupt reality TV star to the White House.)

The promise of the Internet was that it was the great equalizer. Anyone could make something and be the next big thing. What happened in practice is that a half-dozen companies are the platforms, instead of the Internet itself being the platform. And the stuff that has risen to the top has in many ways been of the lowest possible quality. That's discouraging, but considering the way reality TV took off even before the Internet became a daily fixture in our lives, I guess I'm not surprised. The most frustrating thing about that is that it's never been cheaper or easier to respond to popularity. It once cost me a grand a month to keep my little hobby sites on the air. Today, with half that amount, I could scale up to accommodate massive traffic with some button clicks if I had to.

It's not all bad. The ability to raise money for non-profits has become extraordinary. Ubiquitous connectivity means the death of locally installed software, and software-as-a-service is what I do for a living, competing against incumbents with inferior product. Buying basic essentials doesn't even require making lists and going to a store, as you can just push a button on a refrigerator magnet to get more soap delivered. Connected devices figure out the most efficient way to heat my house and put my lights on timers that I can turn on from anywhere. I can talk to my car and it maps out directions on a 17" touch screen. I even bought that car without having to fuck around with a morally questionable dealership. The Internet has without question made life better.

I'm not sure what we do about it, but the difference between me and the people who watch this Logan Paul guy is that they watch him and I don't. Despite the fact that the Internet has almost everything to do with my professional success, it's not a substitute for real human interaction and meaning. Social media is great for keeping in touch with the friends I've made all over the world, but at the end of the day, I want to hang out with my wife and kid, people in the neighborhood, real-life friends. When I do consume electronic media of any kind, it has to have some value to it beyond, "That's popular." Why have we lowered the bar so much?

My going theory is that nothing has really changed in terms of human social behavior, it's just more obvious and pronounced now because the cost of surfacing it (loudly) is so low. That's why I'm annoyed when people make some kind of "kids today!" comment. I'm still not convinced that any generation is worse than the previous.

Two stressful things

posted by Jeff | Saturday, February 10, 2018, 6:19 PM | comments: 0

In November, I downloaded Chip Gaines' book Capital Gaines as something of an impulse buy as I boarded for a cross-country flight. It's pretty light reading, and while he seems like someone who you'd want to have a beer with, you wonder if anything ever gets to him. His life advice is a little flowery, but the wisdom of his and Joanna's decision to quit doing their show Fixer Upper is pretty solid. They believe that you only really have bandwidth to do two things really well. They had the show, their business and their family, and something had to give.

I think he's right, but I would extend his philosophy to suggest that you can only effectively deal with two stressful things at a time. I feel like I'm coming up for air. I've had three situations: An impending product launch at work, delays in the closing of my house sale and a kid wearing 25 bandages because he can't stop compulsively picking holes in his skin. The product launch happened, and I'm hopefully closing in a week or so. The hardest of those situations is still with me, but with the others subsiding, I feel like I can cope.

So if you've got more than two big stressful things affecting you, what do you do about it? We can control the things we go full-on into, but the things that cause us stress are often things we can't abandon. You know, like our child. I try to make time to do stuff that is more me-oriented, and get physically away, but this is hard. As fond as I am of saying, "We all make choices," I don't always follow through.

You don't need permission to exercise self-care, but you can tell others that you need it. That's where I often have a hard time. I need "me time" and instead of asking for it, I start to resent everyone around me. That's certainly not healthy. You also need to make it a frequent practice, maybe even schedule it, if it helps. I for one tend to consider vacation time my self-care opportunities, but sometimes I go months in between. Heck, last time I didn't even go anywhere, and I just spent the week at home.

If your stressful thing count is over two, take a break. You won't feel better by wallowing in your stress.

American inspiration comes from making things, together

posted by Jeff | Tuesday, February 6, 2018, 10:58 PM | comments: 0

Today was a big day for SpaceX, as it successfully launched its Falcon Heavy rocket, a vehicle that can lift twice the mass into space at half the cost of its nearest competitor. If that weren't enough, it then landed two of the boosters simultaneously, which is awesome in part because both had already flown before. The third booster failed to light two of its three engines for its landing on the drone ship at sea, but the cargo is now headed into an elliptical orbit that will intersect the orbit of Mars at the far end. That's a whole lot of achievement for a test flight.

Falcon Heavy is a thing because there are 6,000 people working at SpaceX to make it happen. They're led by Elon Musk, an immigrant who changed the way we exchange money (PayPal), drive cars (Tesla), power islands and our homes (also Tesla) and now put stuff in space. In an era where it seems like America exists only to consume and not to create, to put down instead of lift up, we have this industrialist who continues to change the world and prove that we can in fact make things while making the world better. Today an American company put a sports car in space (instead of something generically heavy) just to prove it could be done.

I don't have much in common with Elon Musk, except perhaps my overly optimistic timelines. For the last year and change, I've been leading product development at a startup that is growing very quickly now. My initial mission has been to get it to a place where it could scale and be maintained, and it took longer than I expected.

Today, we put a customer on that new platform. It was a big day for us, too. When I started that effort, I decided we should code name the project "Gemini," because it was the second big release for us, just as it was the second major era of human spaceflight for NASA. We don't have 6,000 people working on it, but we do have around a dozen. We're not changing the world, but we're definitely changing the lives of our customers in ways that excite them every day. The impact is powerful and exciting. We're making things.

It seems like hate dominates our political and cultural discourse these days, and it gets to be a drag. That's why I find the SpaceX story, and indeed my own, to be a source of inspiration. I've written many times about intrinsic motivators, the real things that compel us to achieve things. Spectacular outcomes are certainly one of those motivators, but so is the opportunity to work with excellent people. It's my favorite thing about my profession, that I get so many chances to work with smart people from all over the world.

Ask yourself, every day, "What am I doing to create something great?" It's not all on you alone. Surround yourself with great people, encourage them and they will inspire you. That, to me, is the American dream.

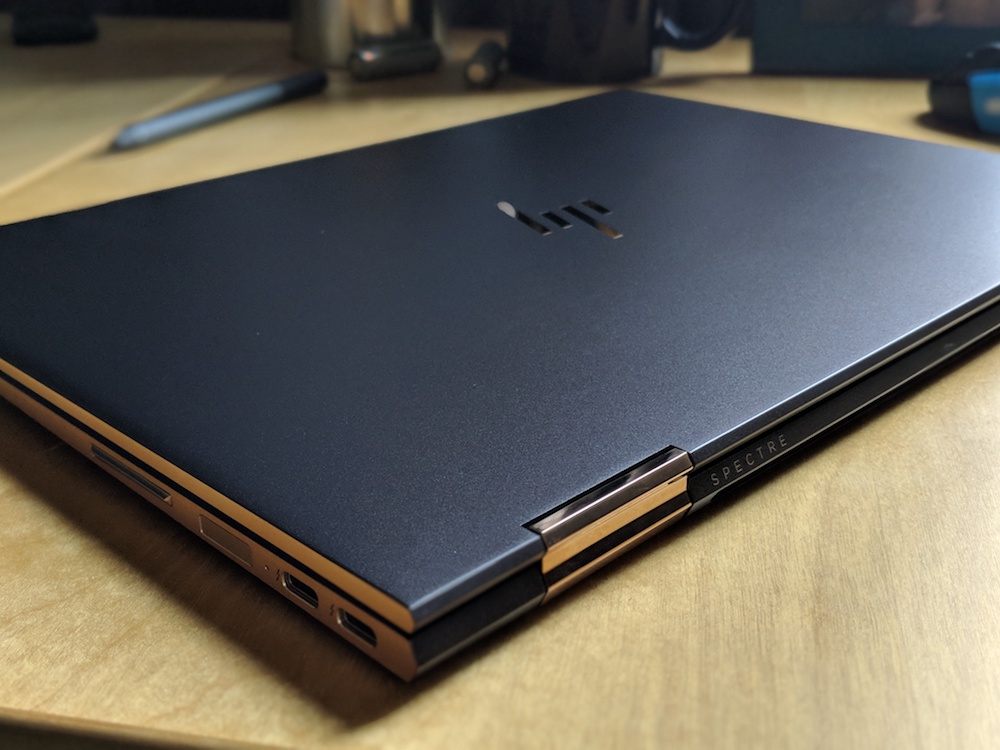

Review: HP Spectre x360 laptop (13", late 2017)

posted by Jeff | Monday, February 5, 2018, 1:30 PM | comments: 1

(If I'm reading the Internets right, the laptop I bought is the third iteration of HP's convertible 2-in-1 laptablet thingy. For clarity, it's the one that has an i7-8550U CPU, the newest iteration of Intel's 4-core mobile thing. I'll also add that this review is largely from the perspective of a developer who is Windows-centric in terms of tooling.)

I wrote about this previously, but I had an extraordinary run of 12 years of Apple laptops. Apple's move to Intel was the thing that made me an Apple convert, because I always liked OS X (now, er, again MacOS) as a daily driver. It meant that I could do regular computer stuff, before smartphones took off, on a better OS, and then run Windows when I had to do work stuff. In fact, it might be kind of weird that for a dozen years, I was using Macs for all of my development work, even though my career has mostly revolved around a platform that was Windows-based. (I say mostly because technically, the latest bits run on almost any platform, despite being from Microsoft.) Virtual machines got it done, and will continue to do so for the foreseeable future on my desktop iMac, which is what I use for work, remotely.

Funny, though, how my computers have run parallel to my phones, just on a slower cycle. My last iPhone was a 3GS, before I flipped to Windows Phone for four years, then Android. It's not that I had anything against the iPhone, and iOS is still the dominant tablet platform at my house. It's just that the phones had a short update cycle and were way more expensive than most options on the WP and then Android platforms, without being worth the premium. That wasn't the case with laptops... spec for spec, the Apple laptops were better hardware at the same price point. That was the case when I bought my last MacBook Pro back in 2014, and frankly it was the only choice with a high resolution ("retina") screen. I love me some pixels, and having text that looks "painted on" the screen.

My last MBP came out in late 2013, so it was 4-year-old technology. It only had 8 gigs of memory, meaning dev work was confined to a VM that had only 6 gigs. In terms of raw performance, it was still adequate, but hitting 6 gigs and swapping memory to disk came fast in a development world where you're running all kinds of distributed computing in a local environment. I needed more memory. A 16 gig MacBook Pro was well in excess of $2k, which is too much. Meanwhile, the latest, well-reviewed iteration of HP's 13" Spectre x360, with 16 gigs of RAM, a 512 gig SSD, the latest i7 CPU, a 4K screen, pen input and tablet mode, clocked in at $1,400 on sale at Best Buy. There's no universe in the Apple world that comes even close to that. They're not even using the new CPU parts yet.

Build quality

I don't know what materials this machine is made from. It's some kind of metal, and unlike the really nice Dell machines of the last few years, it feels like metal. I got the "dark ash silver" color, which is not silver in any way. You know the "antique bronze" that's all the rage in doorknob, lighting and kitchen faucet hardware? It's that color, with a more gold-ish color on the sides and trim. It's really lovely, and not feminine like the rose gold that Apple is doing. It feels very solid in every way, which is no small accomplishment for a machine whose screen flips all the way back to make it a tablet.

As a laptop, the palm rest is large enough to be comfortable, and it doesn't have the sharp edge that my MacBook Pro had, irritating your wrists. I worried that the touch pad would be too short, but it has felt totally right, and it has a bona fide mechanical click to it (another thing the newer Macs don't have). The fingerprint sensor, one of several ways to log in, is on the side, right where you'd pick up the machine. Everything about the machine looks and feels premium. When I think about the last HP I had, circa 2005, it's a night-and-day difference.

Connectivity, hardware and accessories

USB-C is an awesome thing. I've had it as my phone charging and accessory port for more than two years now. On most laptops, it's even better, because they're pushing the data bandwidth of Thunderbolt 3 over it. So you have a cable spec that can push up to 100 watts for charging (the included charger does 65W), and data transfer at four times the speed of USB 3.1. You can connect a high-end graphics card in an external enclosure if you need to, which is intriguing if I ever wanted to do high-end gaming. Overall, there are two USB-C/Thunderbolt 3 ports, either of which can be used for charging, plus a real type-A USB 3.1 port, and this seems more than adequate for any external connection. The power supply is right out of Apple's playbook, with a collapsible plug that's interchangeable with a grounded cable.

As I said, the fingerprint scanner is along the right side, where you would naturally hold the laptop by the sides. There's a volume rocker switch there, too. The left side has the power button, which lights with an LED when it's on or in sleep mode, and there's a headphone jack that supports the usual 4-conductor headphone-with-microphone device.

Like most laptops, there are fans that will crank up when you start demanding a lot from the CPU or GPU. During the course of usual web use, video viewing and Outlook or Word use, they don't come on. The machine stays pretty cool to the touch. Start installing software or doing something CPU-intensive, and yes, the fan noise will be audible.

The keyboard has really nice key travel, about on par with my 2013 MacBook Pro, and the backlighting is similarly good. What I love having as a developer is an insert key. If you use ReSharper in Visual Studio, you know why this is great from a keyboard shortcut standpoint. The track pad is super smooth and great, even though I can't tell what it's made of. As I mentioned, it has real clicks (as opposed to the haptic thing), and it does all of the usual gestures. It might be a setting I haven't found yet, but it doesn't do the "inertial" scrolling that the Mac does. That only sometimes feels weird, but it would definitely feel complete if it did.

This is a touch screen model, with a 4K screen. At my last job, I had a Dell with an HD (1920x1080) screen at 15", and while it was a great screen, there's something to be said for the higher pixel density of Apple's "retina" screens, and this panel at 3840x2160. It reminds me of the invisible pixels on better phones of the last 6 years or so. It's better than standard HD, and I can feel it. I can't tell the difference between it and my 13" MacBook Pro, but the rendering and typography on Windows definitely benefits from a higher resolution. The higher resolution comes at a battery cost, and whatever it is, it's worth it. Also, being able to touch the screen trumps Apple's silly touch bar thing, and not just because you get to keep your function keys at all times (another developer thing, I'm sure).

So what about that tablet mode? I have a Surface Pro 3, which is a remarkable piece of engineering. To rip off the keyboard and have a real tablet is great. But as a PC, it's awkward. The kickstand works, but not so much if you're not at a desk or table. Its "lapability" isn't great. So as a hardware designer, you have to ask yourself: What are the hardware use case proportions, and should we design for the majority scenario? I think I've used my Surface Pro in tablet mode maybe 3% of the time, and even then with a pen. I love it, but it's a rare scenario. The HP allows you to fold the screen back to make a (relatively) chunky tablet to touch or draw on with the included pen. This is ideal, because it's the exception use case, not the rule. I love to sketch out a UI with the pen in OneNote, but I'd rather have something chunky for that rarity than a laptop that doesn't sit on my lap well.

As a side note, I haven't used it as a "tent" to Netflix and chill or whatever, but when traveling, I could see why that would be awesome.

The stock BIOS settings suck, but you can roll with that. Until you change them, Windows won't show the remaining battery time. There were other things as well that I don't remember, but the only real trick was knowing that you had to mash the ESC key at startup to get the menu, then press F10 to change the settings. You'll figure it out.

This comprooder has not only the fingerprint sensor, but also an infrared camera that enables Windows Hello, the mechanism that allows you to log in with your face. Holy crap, it's awesome. Open the computer after it has been sleeping, and it flashes its infrared lights at you, and you're logged in because you have pretty blue eyes like me. If I'm being honest, this is not any more remarkable or convenient than the fact that I'm already picking up the computer by the sides and putting my finger where the fingerprint sensor is, but it's still pretty cool. I know iPhones do this as well (years after Windows computers), but at least in the Pixel world, I'm picking the phone up and it's authenticating me where my finger already is before the screen even comes on.

Battery life is hard to pin down so far, but I'm assuming that for basic non-developer work, you could probably get 10 hours out of it. For developer work, with multiple instances of Visual Studio open, doing "developer stuff," I'm guessing 8 hours. I just haven't put together enough continuous hours to have a good sense of things yet.

Software

OK, so this isn't typical, but I had to blow away the Windows install and do my own. No offense, HP, but I just don't know what all that shit you loaded in there does, or why I need it. Sure, there are about a dozen driver packages to install from HP, and a BIOS update that makes thermal management a little less noisy, but once I got there, the clean Windows install was good. The slightly annoying thing for me was getting Windows to accept an MSDN license key for Windows 10 Pro instead of Home, which I wanted so I could enable BitLocker (it encrypts the drive). It took several tries.

Actually, it took a day of dicking around with Windows to make everything work as expected. In the old Mac world, this was rarely an issue because once you have a Windows VM set up the way you like it, you don't have to do anything ever again. You just copy it from machine to machine. The VM also doesn't have to deal with drivers and exotic stuff that varies from computer to computer. Windows still does some infuriating stuff and buries settings all over the place. For example, I changed a group security policy so I could delete anything regardless of file ownership, and this broke setting up Windows Hello (biometric) logins. WTF? Why are they even related?

This is kind of a hardware issue too, but I think that Windows' BitLocker tech is pretty great. Essentially it uses your login information, in combination with a TPM (trusted platform module) chip in the computer, to encrypt all of your shit. Sure, the Mac kind of has this as well if you set it up, but this is full-on encrypt everything, including the OS outside of your home folder, and you can unlock it with your cloud-based Microsoft account.

Windows is still kind of messy to tinker with compared to MacOS. I imagine that 80% of people don't need to tinker with it, but I still find myself going into various management consoles to do stuff. My darling wife points out that this is probably not most people, and most people would be just fine without doing a fresh install of Windows. She's probably right. But even in my VMs on MacOS, sometimes I would have to go in and mess with some obscure thing just to get through daily use. I think objectively, MacOS is a better platform for John Q. User, but it too has compromises. Security is probably better on Windows if you use BitLocker (never thought I'd say that).

Development

I make software for a living. Or as my coworkers would suggest, I develop in Outlook and Visio. (Jerks.) In any case, I spend a lot of time using tools like Visual Studio, SQL Server Management Studio and things that simulate cloud environments like the Azure Storage Emulator or Redis cache (fired up through the Windows Subsystem for Linux running Ubuntu). So far, the most I've pushed is 10 gigs of memory, but this illustrates why 16 is better than 8.

Building is fast and furious, as is loading a big solution with lots of projects. Having ReSharper running with Visual Studio doesn't seem to exact any penalty, which is a nice feeling, and frankly not one I've had on my other computers recently.

The keyboard and track pad feel right, with good travel on the keys and enough room to swipe around. More importantly for a developer, the function keys are all actual physical keys, and there's a BIOS setting to make them default to F keys, requiring the Fn modifier when you want to use the hardware functions (brightness, volume, and such). There's also an insert key, which is used by default in a lot of keyboard shortcuts.

Windows typography choices aren't great, though some of this is a function of screen resolution. Old school, low density monitors make text look chunky regardless of font, but you can see a difference between MacOS and Windows. A 15" laptop running HD (1920x1080) makes this better, but it's still not great. However, put four times as many pixels on a 13" screen, and the text looks painted on regardless of font. Consolas has never looked as good as it does in UHD.
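To put a rough number on "four times as many pixels," pixel density is just the diagonal resolution divided by the diagonal screen size. This is a minimal sketch, assuming the HP panel measures 13.3 inches diagonally (the usual size for this class of 13" laptop) and comparing it with the 15" HD Dell screen from my last job.

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch: diagonal length in pixels over diagonal length in inches."""
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in


# 15" HD (1920x1080) panel, like the Dell mentioned earlier.
hd_15 = ppi(1920, 1080, 15.0)

# 4K UHD (3840x2160) panel; the 13.3" diagonal is an assumption.
uhd_13 = ppi(3840, 2160, 13.3)

# Total pixel count of UHD relative to HD.
pixel_ratio = (3840 * 2160) / (1920 * 1080)

print(f"15-inch HD:   {hd_15:.0f} ppi")
print(f"13.3-inch 4K: {uhd_13:.0f} ppi")
print(f"pixel count ratio: {pixel_ratio:.0f}x")
```

Under those assumptions, it works out to roughly 147 ppi for the 15" HD screen versus about 331 ppi for the 13.3" UHD panel, so four times the pixel count buys a bit more than twice the density along each axis.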

Conclusions

The big story here starts with price. I found it on sale for $1,400, which is an extraordinary deal for something so well designed with high specs. It doesn't have killer 3D hardware, but that probably doesn't matter if you're not a gamer. Dell, Acer and the like can compete sometimes, but even then, HP's design is still better. (Dell: When will you listen to everyone telling you not to put a camera at the bottom of the screen, looking up noses?) As for Apple, we know it operates on enormous margins, but the new MacBook Pros aren't $900 better. They're not better at all if you go on specs.

Windows 10 is a lot better than it was, but sometimes the tablet-laptop straddle is an awkward thing in software. In hardware terms, this makes for a chunky tablet, but as the secondary use case, it's more than workable. For those few times a year where I start sketching out stuff, this is more than adequate. For the rest of the time, it succeeds where my Surface Pro 3 did not: On my lap.

So far, so good. I'm really happy with this new shiny thing.

EDIT, 2/16/18: After almost two weeks, I can confirm that battery life does vary wildly based on the use cases, but general web stuff, email, Slack and such will definitely get you through 8 hours, maybe 10 or more. Screen brightness has a lot to do with it. In low-light evening work, 40% is way more than adequate. You really only need 100% if you're out in bright sun or are obsessed with seeing the "painted on" nature of text on this amazing screen. For dev work, I think I could probably get close to 8 hours, depending on how often I had to fire up the debugger, but not if I were out in bright sun. And if you're asking why I would be, come on, I live in Central Florida!

The end of a 12-year-run with Mac laptops

posted by Jeff | Friday, February 2, 2018, 9:21 PM | comments: 0

As you might expect, I had been around Macs as early as college, when we had one in our residence life staff office in the hall where I was an RA (a Mac SE, I think). In my first TV job, I got to buy one for use as a video editor. Some years later, Stephanie bought an iBook, when they stopped making them colorful. I didn't buy one myself until 2006, when Apple flipped over to Intel CPUs. Prior to that, I had four other laptops, and the only one that didn't suck was a Sony (it cost a remarkable $2,500 in 1999, and I can't believe I spent that much). That first Intel Mac was the best I had owned up to that point, and was the first of many, though it was not without its problems.

- 2006: First Intel MacBook Pro. About 18 months in, I had to have the entire logic board replaced, at my expense, around $150 I think.

- 2008: A 17" model, when they started doing the machined aluminum. It was huge, but awesome. Two years later, I swapped out the optical drive for an SSD, which breathed new life into it. The battery lasted forever. Sold it to a friend, and I think he's still using it.

- 2010: Diana got a 13" for her birthday. I don't think that one ever had an issue, and we sold it four years later.

- 2012: Tried to buy the new Retina 15" machine. It was crazy fast and wonderful, until the screen burn-in started. Seeing the ghost of a previous UI when working on another one got old pretty fast. Returned it for a replacement that had the same problem. Eventually gave up and returned that one too.

- 2012: A 13" MacBook Air, which while lacking the pretty screen, was wonderfully light. After only 500 charge cycles, the battery capacity dropped to unusable levels and had to be replaced at three years, for about $180. Diana uses it now, and the battery is at 87% after 600 cycles.

- 2014: A 13" MacBook Pro. Bought this one because I desperately needed more SSD space so I could have more than one Windows VM. Still in pretty good shape, but 8 gigs of RAM isn't enough anymore when I'm running lots of different things in a VM on only 6 gigs.

And that brings us to now, when I've found that getting another Mac laptop is financially insane. When I bought the last one, if you wanted a good laptop with the specs I got, the pricing was pretty much the same across the board, only the other manufacturers had crappy designs. What I call the "Surface influence," the act of Microsoft making really nice, thin hardware with the Surface Pro, hadn't really made an impact yet. Bit for bit, Apple still had the best stuff with very little premium paid for it being from Apple.

What a difference four years makes. HP, Dell, Lenovo, Acer and the like all make really nice hardware. It's not that the Macs are less nice, because they're still pretty great (if generally behind a CPU release cycle for extended periods of time). It's just the price difference. I ended up getting HP's Spectre x360, with its nutty flipping screen for when you want to use it as a tablet, for $1,400. That's the latest quad-core CPU, 16 gigs of RAM, a 512 gig SSD, under three pounds with a 4K touch screen and insane battery life. The equivalent MacBook Pro, which would still be a generation behind on CPUs and not have the great screen, would cost $900 more.

Yes, I have to deal with some of the weirdness that is Windows (I immediately flattened the computer with a clean install), and I do love the general typography and ease of use of MacOS, but they've really blown the value curve for me. Congrats, HP... what a huge difference compared to the awful piece of crap I bought from you 14 years ago!